Compute Flow

This page shows how to run a compute-to-data (C2D) flow with ocean.py.

1. Alice publishes a dataset
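This step publishes an asset and prints its decentralized identifier as `DATA_ddo.did`. As we understand Ocean's DID scheme, that identifier is `did:op:` followed by a SHA-256 hex digest. The digest is computed over the data NFT's checksummed address concatenated with the chain ID written in base 10. The helper `ocean_did` below is a hypothetical, minimal sketch of that derivation under that assumption. It is not part of ocean.py. The address and chain ID in the example are placeholders.

```python
import hashlib
import re

# Hypothetical helper, NOT part of ocean.py. Assumes the DID scheme
# did:op:<sha256(checksummed data NFT address + chain ID in base 10)>.
_ADDRESS_RE = re.compile(r"0x[0-9a-fA-F]{40}")


def ocean_did(nft_address: str, chain_id: int) -> str:
    """Derive a did:op identifier from a data NFT address and a chain ID.

    The address is hashed exactly as given, so pass the EIP-55 checksummed
    form. This sketch does not checksum it for you, because that needs
    keccak-256, which hashlib does not provide.
    """
    if not _ADDRESS_RE.fullmatch(nft_address):
        raise ValueError(f"not a 20-byte hex address: {nft_address!r}")
    preimage = nft_address + str(chain_id)  # chain ID rendered in base 10
    digest = hashlib.sha256(preimage.encode("utf-8")).hexdigest()
    return "did:op:" + digest


if __name__ == "__main__":
    # Placeholder address and chain ID, for illustration only.
    print(ocean_did("0x" + "ab" * 20, 8996))
```

If the assumed scheme holds, `ocean_did(DATA_data_nft.address, <your chain ID>)` should equal `DATA_ddo.did` after you run the code in this step. That makes it a quick sanity check on a freshly published asset.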

# Publish data NFT, datatoken, and asset for dataset based on url

# ocean.py offers multiple file object types. A simple url file is enough for here

from ocean_lib.structures.file_objects import UrlFile

DATA_url_file = UrlFile(

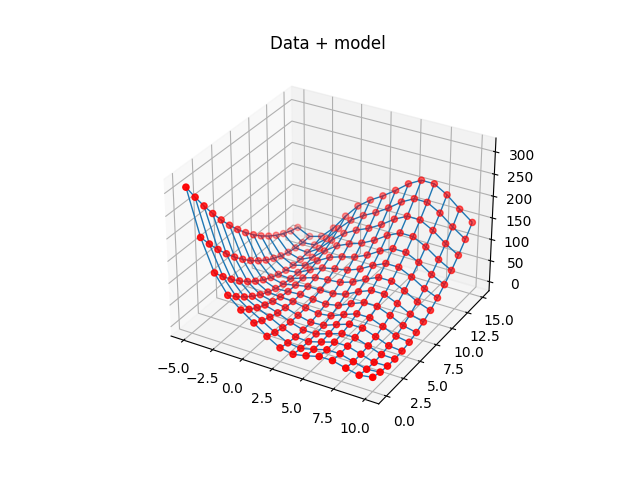

url="https://raw.githubusercontent.com/oceanprotocol/c2d-examples/main/branin_and_gpr/branin.arff"

)

name = "Branin dataset"

(DATA_data_nft, DATA_datatoken, DATA_ddo) = ocean.assets.create_url_asset(name, DATA_url_file.url, {"from": alice}, with_compute=True, wait_for_aqua=True)

print(f"DATA_data_nft address = '{DATA_data_nft.address}'")

print(f"DATA_datatoken address = '{DATA_datatoken.address}'")

print(f"DATA_ddo did = '{DATA_ddo.did}'")2. Alice publishes an algorithm

3. Alice allows the algorithm for C2D for that data asset

4. Bob acquires datatokens for data and algorithm

5. Bob starts a compute job using a free C2D environment

6. Bob monitors logs / algorithm output

Appendix. Tips & tricks
