# Mandatory environment variables

OCEAN_NETWORK=mainnet

OCEAN_NETWORK_URL=<replace this>

PRIVATE_KEY=<secret>

# Optional environment variables

AQUARIUS_URL=https://v4.aquarius.oceanprotocol.com/
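
A script that depends on these variables can fail fast when a mandatory one is missing. The following is a minimal sketch, assuming the variables are read from the process environment. The helper name `readOceanConfig` is hypothetical and not part of any Ocean tool, and falling back to the Aquarius URL shown above is an assumption.

```javascript
// Hypothetical helper: checks the mandatory variables and applies the optional one.
const MANDATORY = ["OCEAN_NETWORK", "OCEAN_NETWORK_URL", "PRIVATE_KEY"];

function readOceanConfig(env) {
  // Collect every mandatory name that is unset or empty.
  const missing = MANDATORY.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error("Missing mandatory environment variables: " + missing.join(", "));
  }
  return {
    network: env.OCEAN_NETWORK,
    networkUrl: env.OCEAN_NETWORK_URL,
    privateKey: env.PRIVATE_KEY,
    // Assumption: use the URL shown above when AQUARIUS_URL is not set.
    aquariusUrl: env.AQUARIUS_URL || "https://v4.aquarius.oceanprotocol.com/",
  };
}
```

A typical call would be `readOceanConfig(process.env)` at the start of a script.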

Ocean was founded to level the playing field for AI and data.

To dive deeper, see this blog or this video.


Ocean's mission is to level the playing field for AI and data.

How? By helping you monetize AI models, compute and data, while preserving privacy.

Ocean is a decentralized data & compute protocol built to scale AI. Its core tech is:

Data NFTs & datatokens, to enable token-gated access control, data wallets, data DAOs, and more.

Compute-to-data: buy & sell private data, while preserving privacy

Learn how Ocean Protocol transforms data sharing and monetization with its powerful Web3 open source tools.

Follow the step-by-step instructions for a no-code solution to unleash the power of Ocean Protocol technologies!

Find APIs, libraries, and other tools to build awesome dApps or integrate with the Ocean Protocol ecosystem.

Earn $ from AI models, track provenance, get more data.

Run AI-powered prediction bots or trading bots to earn $.

Earn OCEAN rewards by predicting (and more streams to come)

For software architects and developers - deploy your own components in Ocean Protocol ecosystem.

Get involved! Learn how to contribute to Ocean Protocol.

Ocean Nodes: Monetize idle compute worldwide by turning unused computing resources into a decentralized, secure, scalable, and privacy-preserving infrastructure for AI training, inference, and data processing.

Developers. Build token-gated AI dApps, or run an Ocean Node.

Data scientists. Earn via predictions, annotations & challenges

Ocean Node Runners. Monetize your idle computing hardware


Token migration between two blockchain networks.

Since Ocean’s departure from the ASI Alliance, the $OCEAN token is an ERC20 token solely representing the ideals of decentralized AI and data.

It has no intended utility value nor is it a staking, platform, governance, payment, NFT, DeFi, meme, reward, or security token.

Its supply is capped at approximately 270,000,000. With buybacks and burns, the supply of $OCEAN will be decreasing over time.

Acquirors can currently exchange for $OCEAN on Coinbase, Kraken, UpBit, Binance US, Uniswap and SushiSwap. Until 2024, the Ocean Token ($OCEAN) was the utility token powering the Ocean Protocol ecosystem, used for staking, governance, and purchasing data services, enabling secure, transparent, and decentralized data exchange and monetization.

For more info, navigate to this section of our official website.


Learn the blockchain concepts behind Ocean

You'll need to know a thing or two about blockchains to understand Ocean Protocol's tech... Let's get started with the basics 🧑‍🏫

Blockchain is a revolutionary technology that enables the decentralized nature of Ocean. At its core, blockchain is a distributed ledger that securely records and verifies transactions across a network of computers. It operates on the following key concepts that ensure trust and immutability:

Guides to use Ocean, with no coding needed.

Contents:

Basic concepts

Using wallets

Host assets

Specification of decentralized identifiers for assets in Ocean Protocol using the DID & DDO standards.

In Ocean, we use decentralized identifiers (DIDs) to identify your asset within the network. Decentralized identifiers (DIDs) are a type of identifier that enables verifiable, decentralized digital identity. In contrast to typical, centralized identifiers, DIDs have been designed so that they may be decoupled from centralized registries, identity providers, and certificate authorities. Specifically, while other parties might be used to help enable the discovery of information related to a DID, the design enables the controller of a DID to prove control over it without requiring permission from any other party. DIDs are URIs that associate a DID subject with a DID document allowing trustable interactions associated with that subject.

Let's recap how Ocean's stack components interact in the DDO publishing flow!

For this particular flow, we use Ocean CLI as the consumer. For more details on Ocean CLI usage, see the Ocean CLI section.

The sequence diagram for this flow is explained step by step below.

Asset Creation Begins

The End User initiates the process by running the command: npm run publish

The process of consuming an asset is straightforward. To achieve this, you only need to execute a single command:

In this command, replace assetDID with the specific DID of the asset you want to consume, and download-location-path with the path where you wish to store the downloaded asset content.

Once executed, this command orchestrates both the ordering of a datatoken and the subsequent download operation. The asset's content is automatically retrieved and saved at the specified location, simplifying the consumption process for users.

Decentralization: Blockchain eliminates the need for intermediaries by enabling a peer-to-peer network where transactions are validated collectively. This decentralized structure reduces reliance on centralized authorities, enhances transparency, and promotes a more inclusive data economy.

Immutability: Once a transaction is recorded on the blockchain, it becomes virtually impossible to alter or tamper with. The data is stored in blocks, which are cryptographically linked together, forming an unchangeable chain of information. Immutability ensures the integrity and reliability of data, providing a foundation of trust in the Ocean ecosystem. Furthermore, it enables reliable traceability of historical transactions.

Consensus Mechanisms: Blockchain networks employ consensus mechanisms to validate and agree upon the state of the ledger. These mechanisms ensure that all participants validate transactions without relying on a central authority, crucially maintaining a reliable view of the blockchain's history. The consensus mechanisms make it difficult for malicious actors to manipulate the blockchain's history or conduct fraudulent transactions. Popular consensus mechanisms include Proof of Work (PoW) and Proof of Stake (PoS).

Ocean harnesses the power of blockchain to facilitate secure and auditable data exchange. This ensures that data transactions are transparent, verifiable, and tamper-proof. Here's how Ocean uses blockchains:

Data Asset Representation: Data assets in Ocean are represented as non-fungible tokens (NFTs) on the blockchain. NFTs provide a unique identifier for each data asset, allowing for seamless tracking, ownership verification, and access control. Through NFTs and datatokens, data assets become easily tradable and interoperable within the Ocean ecosystem.

Smart Contracts: Ocean uses smart contracts to automate and enforce the terms of data exchange. Smart contracts act as self-executing agreements that facilitate the transfer of data assets between parties based on predefined conditions - they are the exact mechanisms of decentralization. This enables cyber-secure data transactions and eliminates the need for intermediaries.

Tamper-Proof Audit Trail: Every data transaction on Ocean is recorded on the blockchain, creating an immutable and tamper-proof audit trail. This ensures the transparency and traceability of data usage, providing data scientists with a verifiable record of the data transaction history. Data scientists can query addresses of data transfers on-chain to understand data usage.

By integrating blockchain technology, Ocean establishes a trusted infrastructure for data exchange. It empowers individuals and organizations to securely share, monetize, and leverage data assets while maintaining control and privacy.

The Consumer then calls ocean.js, which handles the asset creation logic.

Smart Contract Deployment

Ocean.js interacts with the Smart Contracts to deploy: Data NFT, Datatoken, and a pricing schema such as Dispenser for free assets or Fixed Rate Exchange for priced assets.

Once deployed, the smart contracts emit the NFTCreated and DatatokenCreated events (and additionally DispenserCreated and FixedRateCreated for pricing schema deployments).

Ocean.js listens to these events and checks the datatoken template. If it is template 4, no encryption is needed for service files, because the template 4 ERC20 contract is used on top of confidential EVM chains, which already encrypt the information on-chain (e.g. Sapphire Testnet). Otherwise, service files need to be encrypted by Ocean Node's dedicated handler.

DDO Validation

Ocean.js requests Ocean Node to validate the DDO structure against the SHACL schemas, depending on the DDO version. For this task, Ocean Node uses utility functions from the DDO.js library, which is our dedicated tool for DDO interactions.

✅ If Validation Succeeds:

Ocean.js calls setMetadata on-chain and then returns the DID to the Consumer, which passes it back to the End User. The DID is indexed in parallel: Ocean Node listens to blockchain events through its Indexer, including MetadataCreated, and the DDO is processed and stored in Ocean Node's database.

❌ If Validation Fails: Ocean Node logs the issue and responds to Ocean.js with an error status and asset creation halts here.
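
The branching in this flow can be summarized in code. The sketch below is not ocean.js code: `planPublish` and its input names are hypothetical, and it only mirrors the decisions described above (pricing schema by asset type, no service-file encryption for template 4, halt when validation fails).

```javascript
// Hypothetical planner that lists the publishing steps for a given asset.
function planPublish({ isFree, datatokenTemplate, ddoIsValid }) {
  const steps = ["deploy Data NFT", "deploy Datatoken"];

  // Free assets use a Dispenser, priced assets a Fixed Rate Exchange.
  steps.push(isFree ? "deploy Dispenser" : "deploy Fixed Rate Exchange");

  // Template 4 runs on chains that already encrypt on-chain data,
  // so only other templates need Ocean Node to encrypt the service files.
  if (datatokenTemplate !== 4) {
    steps.push("encrypt service files via Ocean Node");
  }

  steps.push("validate DDO via Ocean Node");
  if (!ddoIsValid) {
    steps.push("halt: validation failed");
    return steps;
  }

  steps.push("call setMetadata on-chain", "return DID to the End User");
  return steps;
}
```

For example, `planPublish({ isFree: true, datatokenTemplate: 4, ddoIsValid: true })` yields a plan with a Dispenser and no encryption step.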

Regarding publishing new datasets through consumer, Ocean CLI, please consult this dedicated section.

Let's dive in!

For blockchain beginners

If you have OCEAN in old pools, this will help.

npm run cli download 'assetDID' 'download-location-path'

DIDs in Ocean follow the generic DID scheme and look like this:

The part after did:op: is the SHA-256 hash of the ERC721 contract address (in checksum format) concatenated with the chainId (expressed as a base-10 string). The following JavaScript example shows how to calculate the DID for the asset:
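
A minimal sketch of this calculation using Node's built-in crypto module. It assumes the DID is `did:op:` followed by the hex-encoded SHA-256 digest of the checksummed contract address concatenated with the decimal chainId, and that the address passed in is already in checksum format. The function name `computeDid` and the placeholder address are illustrative only.

```javascript
const { createHash } = require("node:crypto");

// Illustrative helper; assumes nftAddress is already in checksum format.
function computeDid(nftAddress, chainId) {
  // Concatenate the address with the chainId written in base 10.
  const payload = nftAddress + chainId.toString(10);
  const digest = createHash("sha256").update(payload, "utf8").digest("hex");
  return "did:op:" + digest;
}

// Placeholder address (digits only, so checksum casing does not apply).
const exampleDid = computeDid("0x0000000000000000000000000000000000000001", 1);
console.log(exampleDid);
```

Because the chainId is part of the hashed input, the same contract address yields a different DID on each network.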

Before creating a DID, you should first publish a data NFT. We suggest reading the following sections so you are familiar with the process:

did:op:0ebed8226ada17fde24b6bf2b95d27f8f05fcce09139ff5cec31f6d81a7cd2ea

CLI tool to interact with Ocean Protocol's JavaScript library to privately & securely publish, consume and run compute on data.

Welcome to the Ocean CLI, your powerful command-line tool for seamless interaction with Ocean Protocol's data-sharing capabilities. 🚀

The Ocean CLI offers a wide range of functionalities, enabling you to:

Publish 📤 data services: downloadable files or compute-to-data.

Edit ✏️ existing assets.

Consume 📥 data services, ordering datatokens and downloading data.

Run compute 💻 on publicly available datasets using a published algorithm. A free version of the compute-to-data feature is available.

The Ocean CLI is powered by the ocean.js JavaScript library, an integral part of the toolset. 🌐

Let's dive into the CLI's capabilities and unlock the full potential of Ocean Protocol together! If you're ready to explore each functionality in detail, simply go through the next pages.

Aquarius is a tool that tracks and caches the metadata from each chain where the Ocean Protocol smart contracts are deployed. It operates off-chain, running an Elasticsearch database. This makes it easy to query the metadata generated on-chain.

The core job of Aquarius is to continually look out for new metadata being created or updated on the blockchain. Whenever such events occur, Aquarius takes note of them, processes this information, and adds it to its database. This allows it to keep an up-to-date record of the metadata activity on the chains.

Aquarius has its own interface (API) that allows you to easily query this metadata. With Aquarius, you don't need to do the time-consuming task of scanning the data chains yourself. It offers you a convenient shortcut to the information you need. It's ideal for when you need a search feature within your dApp.

Acts as a cache: It stores metadata from multiple blockchains off-chain in an Elasticsearch database.

Monitors events: It continually checks for MetadataCreated and MetadataUpdated events, processing these events and updating them in the database.

Offers easy query access: The Aquarius API provides a convenient method to access metadata without needing to scan the blockchain.

Serves as an API: It provides a REST API that fetches data from the off-chain datastore.
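
As an illustration of querying the REST API, the sketch below looks up a DDO by its DID. The route `/api/aquarius/assets/ddo/<did>` is an assumption about the Aquarius API and should be checked against the API documentation of the deployment you use; `ddoUrl` and `fetchDdo` are hypothetical helper names, and the global `fetch` requires Node 18 or later.

```javascript
// Assumed route for looking up a DDO by DID; verify it for your deployment.
const DDO_ROUTE = "/api/aquarius/assets/ddo/";

function ddoUrl(aquariusBaseUrl, did) {
  // Drop trailing slashes so the joined URL has exactly one separator.
  const base = aquariusBaseUrl.replace(/\/+$/, "");
  return base + DDO_ROUTE + did;
}

async function fetchDdo(aquariusBaseUrl, did) {
  const response = await fetch(ddoUrl(aquariusBaseUrl, did));
  if (!response.ok) {
    throw new Error("Aquarius returned HTTP " + response.status);
  }
  return response.json();
}
```

The point of the design is that the client never scans the chain itself: one HTTP request to the cache replaces the on-chain lookup.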

We recommend checking the README in the Aquarius GitHub repository for the steps to run Aquarius. If you see any errors in the instructions, please open an issue within the GitHub repository.

Python: This is the main programming language used in Aquarius.

Flask: This Python framework is used to construct the Aquarius API.

Elasticsearch: This is a search and analytics engine used for efficient data indexing and retrieval.

REST API: Aquarius uses this software architectural style for providing interoperability between computer systems on the internet.

Click to explore the documentation and more examples in Postman.

Exploring fractional ownership in Web3, combining NFTs and DeFi for co-ownership of data IP and tokenized DAOs for collective data management.

Fractional ownership represents an exciting subset within the realm of Web3, combining the realms of NFTs and DeFi. It introduces the concept of co-owning data intellectual property (IP).

Ocean offers two approaches to facilitate fractional ownership:

Sharded Holding of ERC20 Datatokens: Under this approach, each holder of ERC20 tokens possesses the typical datatoken rights outlined earlier. For instance, owning 1.0 datatoken allows consumption of a particular asset. Ocean conveniently provides this feature out of the box.

Sharding ERC721 Data NFT: This method involves dividing the ownership of an ERC721 data NFT among multiple individuals, granting each co-owner the right to a portion of the earnings generated from the underlying IP. Moreover, these co-owners collectively control the data NFT. For instance, a dedicated DAO may be established to hold the data NFT, featuring its own ERC20 token. DAO members utilize their tokens to vote on updates to data NFT roles or the deployment of ERC20 datatokens associated with the ERC721.
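
To make the second approach concrete, the sketch below splits earnings among co-owners in proportion to the shards they hold. The pro-rata rule, the function name `splitEarnings`, and the use of integer base units are assumptions for illustration; the actual distribution rule would be defined by the DAO or sharding contract.

```javascript
// Hypothetical pro-rata split. Amounts are BigInt base units to avoid rounding drift.
function splitEarnings(totalEarnings, shards) {
  const totalShards = Object.values(shards).reduce((sum, count) => sum + count, 0n);
  if (totalShards === 0n) {
    throw new Error("no shards issued");
  }
  const payouts = {};
  let distributed = 0n;
  for (const [owner, count] of Object.entries(shards)) {
    // BigInt division rounds down, so a small remainder can stay undistributed.
    payouts[owner] = (totalEarnings * count) / totalShards;
    distributed += payouts[owner];
  }
  return { payouts, remainder: totalEarnings - distributed };
}
```

With 1000 units of earnings and shards held 1:2, the two owners receive 333 and 666 units and 1 unit remains to be handled by whatever rule the DAO adopts.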

It's worth noting that for the second approach, one might consider utilizing platforms like Niftex for sharding. However, important questions arise in this context:

What specific rights do shard-holders possess?

It's possible that they have limited rights, just as Amazon shareholders don't have the authority to roam the hallways of Amazon's offices simply because they own shares.

Additionally, how do shard-holders exercise control over the data NFT?

These concerns are effectively addressed by employing a tokenized DAO, as previously described.

Data DAOs present a fascinating use case whenever a group of individuals desires to collectively manage data or consolidate data for increased bargaining power. Such DAOs can take the form of unions, cooperatives, or trusts.

Consider the following example involving a mobile app: You install the app, which includes an integrated crypto wallet. After granting permission for the app to access your location data, it leverages the DAO to sell your anonymized location data on your behalf. The DAO bundles your data with that of thousands of other DAO members, and as a member, you receive a portion of the generated profits.

This use case can manifest in several variations. Each member's data feed could be represented by their own data NFT, accompanied by corresponding datatokens. Alternatively, a single data NFT could aggregate data feeds from all members into a unified feed, which is then fractionally owned through sharded ERC20 tokens (as described in approach 1) or by sharding the ERC721 data NFT (as explained in approach 2). If you're interested in establishing a data union, we recommend reaching out to our associates at .

To make changes to a dataset, you'll need to start by retrieving the asset's Decentralized Data Object (DDO).

Obtaining the DDO of an asset is a straightforward process. You can accomplish this task by executing the following command:

npm run cli getDDO 'assetDID'

After retrieving the asset's DDO and saving it as a JSON file, you can edit the metadata as needed. Once you've made the necessary changes, you can use the following command to apply the updated metadata:

With the Uploader UI, users can effortlessly upload their files and obtain a unique hash or CID (Content Identifier) for each uploaded asset to use on the Marketplace.

Step 1: Copy the hash or CID from your upload.

Step 2: Open the Ocean Marketplace. Go to publish and fill in all the information for your dataset.

Step 3: When selecting the file to publish, open the tab for your hosting provider (e.g. the "Arweave" tab).

Step 4: Paste the hash you copied earlier.

Step 5: Click on "VALIDATE" to ensure that your file gets validated correctly.

This feature not only simplifies the process of storing and managing files but also seamlessly integrates with the Ocean Marketplace. Once your file is uploaded via Uploader UI, you can conveniently use the generated hash or CID to interact with your assets on the Ocean Marketplace, streamlining the process of sharing, validating, and trading your digital content.

Ocean Protocol is now using Ocean Nodes for all backend infrastructure. Previously we used these three components:

Aquarius: Aquarius is a metadata cache used to enhance search efficiency by caching on-chain data into Elasticsearch. By accelerating metadata retrieval, Aquarius enables faster and more efficient data discovery.

Provider: The Provider component was used to facilitate various operations within the ecosystem. It assists in asset downloading, handles DDO (Decentralized Data Object) encryption, and establishes communication with the operator-service for Compute-to-Data jobs. This ensures secure and streamlined interactions between different participants.

Subgraph: The Subgraph is an off-chain service that utilizes GraphQL to offer efficient access to information related to datatokens, users, and balances. By leveraging the subgraph, data retrieval becomes faster compared to an on-chain query. This enhances the overall performance and responsiveness of applications that rely on accessing this information.

How to set up a MetaMask wallet on Chrome

Before you can publish or purchase assets, you will need a crypto wallet. As MetaMask is one of the most popular crypto wallets around, we made a tutorial to show you how to get started with MetaMask to use Ocean's tech.

MetaMask can be connected with a TREZOR or Ledger hardware wallet but we don't cover those options below; see .

How to use centralized hosting with Azure Cloud for your NFT assets

Azure provides various options to host data and multiple configuration possibilities. Publishers are required to do their research and decide what would be the right choice. The below steps provide one of the possible ways to host data using Azure storage and publish it on Ocean Marketplace.

Prerequisite

Create an account on Azure. Users might also be asked to provide payment details and billing addresses that are out of this tutorial's scope.

Step 1 - Create a storage account

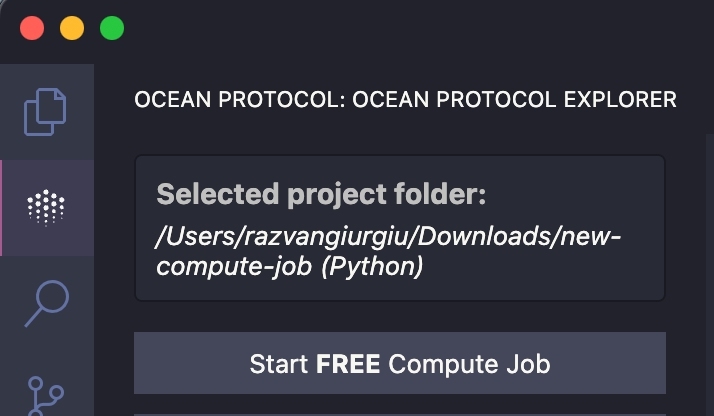

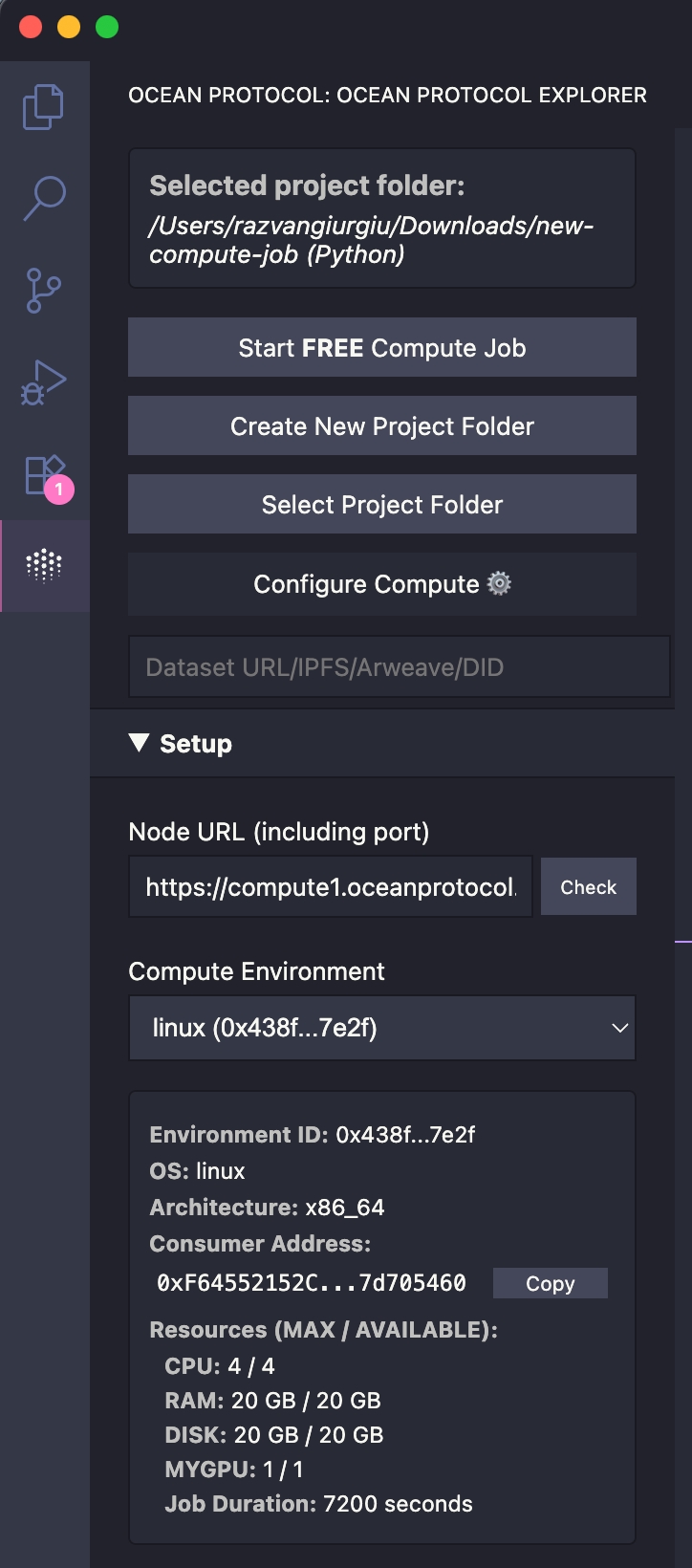

Run compute jobs on Ocean Protocol directly from VS Code. The extension automatically detects your active algorithm file and streamlines job submission, monitoring, and results retrieval. Simply open a Python or JavaScript file and click Start Compute Job. You can install the extension from

Once installed, the extension adds an Ocean Protocol section to your VS Code workspace. Here you can configure your compute settings and run compute jobs using the currently active algorithm file.

How to use Google Storage for your NFT assets

Google Storage

Google Cloud Storage is a scalable and reliable object storage service provided by Google Cloud. It allows you to store and retrieve large amounts of unstructured data, such as files, with high availability and durability. You can organize your data in buckets and benefit from features like access control, encryption, and lifecycle management. With various storage classes available, you can optimize cost and performance based on your data needs. Google Cloud Storage integrates seamlessly with other Google Cloud services and provides APIs for easy integration and management.

Prerequisite

Create an account on Google Cloud. Users might also be asked to provide payment details and billing addresses that are out of this tutorial's scope.

Step 1 - Create a storage account

Go to

In the Google Cloud console, go to the Cloud Storage Buckets page

How to use decentralized hosting for your NFT assets

Enhance the efficiency of your file uploads by leveraging the simplicity of the storage system for Arweave. Dive into our comprehensive guide to discover detailed steps and tips, ensuring a smooth and hassle-free uploading process. Your experience matters, and we're here to make it as straightforward as possible.

Liquidity pools and dynamic pricing were supported in previous versions of the Ocean Market. However, these features have been deprecated, and we now advise everyone to remove their liquidity from the remaining pools. It is no longer possible to do this via Ocean Market, so please follow this guide to remove your liquidity via Etherscan.

🧑🏽‍💻 Local Development Environment for Ocean Protocol

The Barge component of Ocean Protocol is a powerful tool designed to simplify the development process by providing Docker Compose files for running the full Ocean Protocol stack locally. It allows developers to set up and configure the various services required by Ocean Protocol for local testing and development purposes.

By using the Barge component, developers can spin up an environment that includes default versions of the core Ocean components. Additionally, it deploys all the smart contracts from the ocean-contracts repository, ensuring a complete and functional local setup. Barge also starts additional services, such as a local blockchain simulator used for smart contract development and Elasticsearch, a powerful search and analytics engine required by Aquarius for efficient indexing and querying of data sets. A full list of components and exposed ports is available in the GitHub repository.

To explore all the available options and gain a deeper understanding of how to utilize the Barge component, you can visit the official GitHub of Ocean Protocol.

By utilizing the Barge component, developers gain the freedom to experiment with, customize, and fine-tune their local development environment. Barge also offers the flexibility to override the Docker image tag associated with specific components: by setting the appropriate environment variable before executing the start_ocean.sh command, developers can choose the versions of the various components according to their requirements. For instance, developers can modify the:

The DDO validation within the DDO.js library is performed based on SHACL schemas which enforce DDO fields types and structure based on DDO version.

NOTE: For more information regarding DDO structure, please consult .

The above diagram depicts the high level flow of Ocean core stack interaction for DDO validation using DDO.js, which will be called by Ocean Node whenever a new DDO is to be published.

Based on the DDO version, ddo.js will apply the corresponding SHACL schema to validate DDO fields against it.

Supported SHACL schemas can be found .

NOTE: indexedMetadata is not taken into consideration during DDO validation.
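
The idea of selecting validation rules by DDO version can be sketched as follows. This is not the DDO.js implementation and does not use SHACL: the required-field lists below are a simplified, hypothetical stand-in for the real schemas, and the version keys are illustrative.

```javascript
// Hypothetical stand-in for the SHACL schemas: required fields per version.
const REQUIRED_FIELDS_BY_VERSION = {
  "4.1.0": ["id", "nftAddress", "chainId", "metadata", "services"],
  deprecated: ["id", "nftAddress", "chainId"],
};

function validateDdo(ddo) {
  const required = REQUIRED_FIELDS_BY_VERSION[ddo.version];
  if (!required) {
    return { valid: false, errors: ["unsupported version: " + ddo.version] };
  }
  // indexedMetadata is left out of validation, as noted above.
  const { indexedMetadata, ...candidate } = ddo;
  const errors = required
    .filter((field) => !(field in candidate))
    .map((field) => "missing field: " + field);
  return { valid: errors.length === 0, errors };
}
```

Keeping the rules in a table keyed by version means supporting a new DDO version only requires adding an entry, which is the same separation the schema-per-version approach provides.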

ERC20 datatokens represent licenses to access the assets.

Fungible tokens are a type of digital asset that are identical and interchangeable with each other. Each unit of a fungible token holds the same value and can be exchanged on a one-to-one basis. This means that one unit of a fungible token is indistinguishable from another unit of the same token. Examples of fungible tokens include cryptocurrencies like Bitcoin (BTC) and Ethereum (ETH), where each unit of the token is equivalent to any other unit of the same token. Fungible tokens are widely used for transactions, trading, and as a means of representing value within blockchain-based ecosystems.

Datatokens are fundamental within Ocean Protocol, representing a key mechanism to access data assets in a decentralized manner. In simple terms, a datatoken is an ERC20-compliant token that serves as access control for a data/service represented by a data NFT.

Ocean Protocol's JavaScript library to manipulate DDO and Asset fields and to validate DDO structures depending on version.

Welcome to the DDO.js! Your utility library for working with DDOs and Assets like a pro. 🚀

The DDO.js offers a wide range of functionalities, enabling you to:

Instantiate a DDO 📤 via DDOManager, depending on version.

Retrieve 📥 DDO data together with Asset fields using defined helper methods.

To edit fields in the DDO structure, obtain a DDO instance from DDOManager and call its updateFields method. The method is present for all types of DDOs, but it targets specific DDO fields according to the DDO's version.

NOTE: Some restrictions apply: fields that do not exist for a given DDO type cannot be updated.

For example, updating the services key is not supported for a deprecatedDDO, because a deprecatedDDO is not supposed to store services information. It is designed to support only: id, nftAddress, chainId.
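
The restriction for a deprecatedDDO can be illustrated with a small guard. This is a hypothetical sketch and not the DDO.js updateFields implementation: the function name `updateDeprecatedDdo` is made up, and only the list of supported fields comes from the text above.

```javascript
// Fields a deprecatedDDO supports, per the description above.
const DEPRECATED_DDO_FIELDS = ["id", "nftAddress", "chainId"];

// Hypothetical guard: rejects updates to fields a deprecatedDDO does not store.
function updateDeprecatedDdo(ddo, changes) {
  const rejected = Object.keys(changes).filter(
    (key) => !DEPRECATED_DDO_FIELDS.includes(key)
  );
  if (rejected.length > 0) {
    throw new Error("Unsupported fields for a deprecatedDDO: " + rejected.join(", "));
  }
  // Return a new object so the original DDO is left untouched.
  return { ...ddo, ...changes };
}
```

Calling it with `{ chainId: 137 }` returns an updated copy, while `{ services: [] }` raises an error instead of silently adding a field the DDO type is not meant to hold.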

How to host your data and algorithm NFT assets like a champ 🏆 😎

The most important thing to remember is that wherever you host your asset... it needs to be reachable & downloadable. It cannot live behind a private firewall such as a private GitHub repo. You need to use a proper hosting service!

The URL to your asset is encrypted in the publishing process!

If you want to publish cool things on the Ocean Marketplace, then you'll first need a place to host your assets, as Ocean doesn't store data; you're responsible for hosting it on your chosen service and providing the necessary details for publication. You have SO many options for where to host your asset, including centralized and decentralized storage systems. Places to host may include: GitHub, IPFS, Arweave, AWS, Azure, Google Cloud, and your own personal home server (if that's you, then you probably don't need a tutorial on hosting assets). Really, anywhere with a downloadable link to your asset is fine.

An integral part of the Ocean Protocol stack

It is a REST API designed specifically for the provision of data services. It essentially acts as a proxy that encrypts and decrypts the metadata and access information for the data asset.

Constructed using the Python Flask HTTP server, the Provider service is the only component in the Ocean Protocol stack with the ability to access your data, which makes it an important layer of security for your information.

The Provider service has several key functions. Firstly, it performs on-chain checks to ensure the buyer has permission to access the asset. Secondly, it encrypts the URL and metadata during the publication phase, providing security for your data during the initial upload.

The Provider decrypts the URL when a dataset is downloaded and streams the data directly to the buyer; it never reveals the asset URL to the buyer. This provides a layer of security and ensures that access is only provided when necessary.
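
The download path described above can be sketched as a small proxy function. This is not the Provider's code (which is a Python Flask service): `serveDownload` and the injected helpers `hasOnChainAccess`, `decryptUrl`, and `openStream` are hypothetical names, written synchronously to keep the example short.

```javascript
// Hypothetical proxy: checks access, decrypts the URL internally, returns only the stream.
function serveDownload(request, deps) {
  // 1. On-chain check: does this consumer have permission for the asset?
  if (!deps.hasOnChainAccess(request.consumer, request.did)) {
    return { status: 403 };
  }
  // 2. Decrypt the asset URL; it stays inside this function.
  const url = deps.decryptUrl(request.did);
  // 3. Stream the content; the response never contains the URL itself.
  return { status: 200, stream: deps.openStream(url) };
}
```

Because the URL is only ever held in a local variable, a buyer who inspects the response learns the content but not where it is hosted.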

Datatokens enable data assets to be tokenized, allowing them to be easily traded, shared, and accessed within the Ocean Protocol ecosystem. Each datatoken is associated with a particular data asset, and its value is derived from the underlying dataset's availability, scarcity, and demand.

By using datatokens, data owners can retain ownership and control over their data while still enabling others to access and utilize it based on predefined license terms. These license terms define the conditions under which the data can be accessed, used, and potentially shared by data consumers.

Each datatoken represents a sub-license from the base intellectual property (IP) owner, enabling users to access and consume the associated dataset. The license terms can be set by the data NFT owner or default to a predefined "good default" license. The fungible nature of ERC20 tokens aligns perfectly with the fungibility of licenses, facilitating seamless exchangeability and interoperability between different datatokens.

By adopting the ERC20 standard for datatokens, Ocean Protocol ensures compatibility and interoperability with a wide array of ERC20-based wallets, decentralized exchanges (DEXes), decentralized autonomous organizations (DAOs), and other blockchain-based platforms. This standardized approach enables users to effortlessly transfer, purchase, exchange, or receive datatokens through various means such as marketplaces, exchanges, or airdrops.

Data owners and consumers can engage with datatokens in numerous ways. Datatokens can be acquired through transfers or obtained by purchasing them on dedicated marketplaces or exchanges. Once in possession of the datatokens, users gain access to the corresponding dataset, enabling them to utilize the data within the boundaries set by the associated license terms.

Once someone has generated datatokens, they can be used in any ERC20 exchange, centralized or decentralized. In addition, Ocean provides a convenient default marketplace that is tuned for data: Ocean Market. It’s a vendor-neutral reference data marketplace for use by the Ocean community.

You can publish a data NFT initially with no ERC20 datatoken contracts. This means you simply aren’t ready to grant access to your data asset yet (sub-license it). Then, you can publish one or more ERC20 datatoken contracts against the data NFT. One datatoken contract might grant consume rights for 1 day, another for 1 week, etc. Each different datatoken contract is for different license terms.
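
The relationship between one data NFT and several datatoken contracts can be modeled with plain objects. The sketch below is illustrative only: the symbols, the `availableLicenses` helper, and the rule that a balance of at least 1 datatoken unlocks the corresponding license are assumptions for the example, not on-chain logic.

```javascript
// Hypothetical model: one data NFT with two datatoken contracts, each with its own terms.
const dataNft = {
  name: "example-data-nft",
  datatokens: [
    { symbol: "DT-1DAY", licenseTerms: "consume rights for 1 day" },
    { symbol: "DT-1WEEK", licenseTerms: "consume rights for 1 week" },
  ],
};

// Lists the license terms a holder can use, given balances keyed by datatoken symbol.
function availableLicenses(nft, balances) {
  return nft.datatokens
    .filter((datatoken) => (balances[datatoken.symbol] || 0) >= 1)
    .map((datatoken) => datatoken.licenseTerms);
}
```

A data NFT published with an empty `datatokens` list grants no licenses at all, which matches the case of an asset that is not yet ready to be sub-licensed.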

AQUARIUS_VERSION

PROVIDER_VERSION

CONTRACTS_VERSION

RBAC_VERSION

ELASTICSEARCH_VERSION

⚠️ We've got an important heads-up about Barge that we want to share with you. Brace yourself, because Barge is not for the faint-hearted! Here's the deal: Barge works great on Linux, but we need to be honest about its limitations on macOS. And, well, it doesn't work at all on Windows. Sorry, Windows users!

To make things easier for everyone, we strongly recommend trying a testnet first. Everything is configured already, so it should be sufficient for your needs as well. Visit the networks page for clarity on the available test networks. ⚠️
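
Returning to the version overrides listed earlier, the sketch below builds the environment for a Barge run from a set of overrides. The helper `bargeEnv` is hypothetical, the variable names are the ones listed above, and the image tag in the usage comment is a placeholder to be replaced with a real tag.

```javascript
// Variables named above as overridable before running start_ocean.sh.
const OVERRIDABLE = [
  "AQUARIUS_VERSION",
  "PROVIDER_VERSION",
  "CONTRACTS_VERSION",
  "RBAC_VERSION",
  "ELASTICSEARCH_VERSION",
];

// Hypothetical helper: merges version overrides into a base environment.
function bargeEnv(overrides, baseEnv = {}) {
  for (const name of Object.keys(overrides)) {
    if (!OVERRIDABLE.includes(name)) {
      throw new Error("Not an overridable Barge variable: " + name);
    }
  }
  return { ...baseEnv, ...overrides };
}

// Usage (not executed here): start Barge with the merged environment.
// const { spawn } = require("node:child_process");
// const env = bargeEnv({ PROVIDER_VERSION: "<image tag>" }, process.env);
// spawn("./start_ocean.sh", [], { env, stdio: "inherit" });
```

Validating the names up front catches typos such as a misspelled variable, which would otherwise be ignored silently and leave the default image in use.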

Features an EventsMonitor: This component runs continually to retrieve and index chain Metadata, saving results into an Elasticsearch database.

Configurable components: The EventsMonitor has customizable features like the MetadataContract, Decryptor class, allowed publishers, purgatory settings, VeAllocate, start blocks, and more.

Datasets and Algorithms

Compute-to-Data introduces a paradigm where datasets remain securely within the premises of the data holder, ensuring strict data privacy and control. Only authorized algorithms are granted access to operate on these datasets, subject to specific conditions, within a secure and isolated environment. In this context, algorithms are treated as valuable assets, comparable to datasets, and can be priced accordingly. This approach enables data holders to maintain control over their sensitive data while allowing for valuable computations to be performed on them, fostering a balanced and secure data ecosystem.

To define the accessibility of algorithms, their classification as either public or private can be specified by setting the attributes.main.type value in the Decentralized Data Object (DDO):

"access" - public. The algorithm can be downloaded, given appropriate datatoken.

"compute" - private. The algorithm is only available to use as part of a compute job without any way to download it. The Algorithm must be published on the same Ocean Provider as the dataset it's targeted to run on.

This flexibility allows for fine-grained control over algorithm usage, ensuring data privacy and enabling fair pricing mechanisms within the Compute-to-Data framework.

For each dataset, Publishers have the flexibility to define permission levels for algorithms to execute on their datasets, offering granular control over data access.

There are several options available for publishers to configure these permissions:

allow selected algorithms, referenced by their DID

allow all algorithms published within a network or marketplace

allow raw algorithms, for advanced use cases circumventing algorithm as an asset type, but most prone to data escape

All implementations default to private, meaning that no algorithms are allowed to run on a compute dataset upon publishing. This precautionary measure helps prevent data leakage by thwarting rogue algorithms that could be designed to extract all data from a dataset. By establishing private permissions as the default setting, publishers ensure a robust level of protection for their data assets and mitigate the risk of unauthorized data access.
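The permission options and the private-by-default rule above can be sketched as a small check. The policy shape (`allowedAlgorithmDids`, `allowAllPublished`, `allowRaw`) and the function names are hypothetical and chosen for illustration; they are not the DDO schema.

```javascript
// Hypothetical permission policy for a compute dataset.
function defaultPolicy() {
  // Private by default: no algorithm may run until the publisher opts in.
  return { allowedAlgorithmDids: [], allowAllPublished: false, allowRaw: false };
}

function isAlgorithmAllowed(policy, algorithm) {
  if (algorithm.raw) {
    // Raw algorithms are not published assets, so only the explicit flag applies.
    return policy.allowRaw;
  }
  if (policy.allowAllPublished) {
    return true;
  }
  // Otherwise only algorithms selected by DID are allowed.
  return policy.allowedAlgorithmDids.includes(algorithm.did);
}

const strictPolicy = defaultPolicy();
const selectivePolicy = { ...defaultPolicy(), allowedAlgorithmDids: ["did:op:trusted"] };
```

With `strictPolicy`, every algorithm is rejected; with `selectivePolicy`, only the algorithm whose DID is listed may run.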

npm run cli editAsset 'DATASET_DID' 'PATH_TO_UPDATED_FILE'

Now let's plug the DDO V4 example, DDOExampleV4, into the following JavaScript code, assuming @oceanprotocol/ddo-js has already been installed as a dependency:

Execute script

node validate-ddo.js

Validate DDO 📤 using SHACL schemas.

Edit ✏️ existing fields of DDO and Asset.

It is available as an npm package. To install it in your JS project, run the following in the console:

Let's dive into the DDO.js's capabilities together! If you're ready to explore each functionality in detail, simply go through the next pages.

npm install @oceanprotocol/ddo-js

indexedMetadata.nft.state

Supported fields to be updated are:

Now let's plug the DDO V4 example, DDOExampleV4, into the following JavaScript code, assuming @oceanprotocol/ddo-js has already been installed as a dependency:

Execute script

export interface UpdateFields {
  id?: string;
  nftAddress?: string;
  chainId?: number;
  datatokens?: AssetDatatoken[];
  indexedMetadata?: IndexedMetadata;
  services?: ServiceV4[] | ServiceV5[];
  issuer?: string;
  proof?: Proof;
}

// update-ddo-fields.js
const { DDOManager } = require('@oceanprotocol/ddo-js');
// DDOExampleV4 is assumed to be defined as in the `Instantiate DDO section`.
const ddoInstance = DDOManager.getDDOClass(DDOExampleV4);
const nftAddressToUpdate = "0xfF4AE9869Cafb5Ff725f962F3Bbc22Fb303A8aD8";
// updateFields supports updating multiple fields in one call.
ddoInstance.updateFields({ nftAddress: nftAddressToUpdate });
// The same script can be applied on DDO V5 and deprecated DDO from `Instantiate DDO section`.

// validate-ddo.js
const { DDOManager } = require('@oceanprotocol/ddo-js');
// DDOExampleV4 is assumed to be defined as in the `Instantiate DDO section`.
const ddoInstance = DDOManager.getDDOClass(DDOExampleV4);
// `await` is not allowed at the top level of a CommonJS script, so wrap it.
async function main() {
  const validation = await ddoInstance.validate();
  console.log('Validation true/false: ' + validation[0]);
  console.log('Validation message: ' + validation[1]);
}
main();

node update-ddo-fields.js

Go to the Chrome Web Store for extensions and search for MetaMask.

Install MetaMask. The wallet provides a friendly user interface that will help you through each step. MetaMask gives you two options: importing an existing wallet or creating a new one. Choose to Create a Wallet:

In the next step, create a new password for your wallet. Read through and accept the terms and conditions. After that, MetaMask will generate a Secret Backup Phrase for you. Write it down and store it in a safe place.

Continue forward. On the next page, MetaMask will ask you to confirm the backup phrase. Select the words in the correct sequence:

Voila! Your account is now created. You can access MetaMask via the browser extension in the top right corner of your browser.

You can now manage ETH and OCEAN with your wallet. You can copy your account address to the clipboard from the options. When you want someone to send ETH or OCEAN to you, you will have to give them that address. It's not a secret.

You can also watch this video tutorial if you want more help setting up MetaMask.

Sometimes it is required to use custom or external networks in MetaMask. We can add a new one through MetaMask's Settings.

Open the Settings menu and find the Networks option. When you open it, you'll be able to see all the networks your MetaMask wallet currently uses. Click the Add Network button.

There are a few empty inputs we need to fill in:

Network Name: this is the name that MetaMask is going to use to differentiate your network from the rest.

New RPC URL: to operate with a network we need an endpoint (RPC). This can be a public or private URL.

Chain ID: each chain has its own numeric ID.

Currency Symbol: it's the currency symbol MetaMask uses for your network

Block Explorer URL: MetaMask uses this to provide a direct link to the network block explorer when a new transaction happens

When all the inputs are filled just click Save. MetaMask will automatically switch to the new network.
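The same five inputs can also be expressed as the parameter object of MetaMask's `wallet_addEthereumChain` request (EIP-3085). The sketch below only builds that object. The helper name `buildAddChainParams` and the example values (a local development network with chain ID 1337) are assumptions for illustration.

```javascript
// Builds the parameter object for a `wallet_addEthereumChain` request
// from the same fields the MetaMask "Add Network" form asks for.
function buildAddChainParams({ networkName, rpcUrl, chainId, currencySymbol, blockExplorerUrl }) {
  return {
    chainId: "0x" + chainId.toString(16), // the request expects a hex string
    chainName: networkName,
    rpcUrls: [rpcUrl],
    nativeCurrency: { name: currencySymbol, symbol: currencySymbol, decimals: 18 },
    // The block explorer is optional, so leave it out when not provided.
    blockExplorerUrls: blockExplorerUrl ? [blockExplorerUrl] : undefined,
  };
}

// Example values are placeholders for a local development chain.
const exampleParams = buildAddChainParams({
  networkName: "Local Dev Network",
  rpcUrl: "http://localhost:8545",
  chainId: 1337,
  currencySymbol: "ETH",
});
```

In a browser with MetaMask installed, such an object could be passed as `window.ethereum.request({ method: "wallet_addEthereumChain", params: [exampleParams] })`, which prompts the user to approve the new network.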

Go to Azure portal

Go to the Azure portal: https://portal.azure.com/#home and select Storage accounts as shown below.

Create a new storage account

Fill in the details

Storage account created

Step 2 - Create a blob container

Step 3 - Upload a file

Step 4 - Share the file

Select the file to be published and click Generate SAS

Configure the SAS details and click Generate SAS token and URL

Copy the generated link

Step 5 - Publish the asset using the generated link

Now, copy and paste the link into the Publish page in the Ocean Marketplace.

Install the extension from the VS Code Marketplace

Open the Ocean Protocol panel from the activity bar

Configure your compute settings:

Node URL (pre-filled with default Ocean compute node)

Optional private key for your wallet

Select your files:

Algorithm file (JS or Python)

Optional dataset file (JSON)

Results folder location

Click Start Compute Job

Monitor the job status and logs in the output panel

Once completed, the results file will automatically open in VS Code

Watch our step-by-step workshop on using the Ocean Protocol VSCode Extension: Ocean VS code extension - Discord Algorithm Workshop

VS Code 1.96.0 or higher

Verify your RPC URL, Ocean Node URL, and Compute Environment URL if connections fail.

Check the output channels for detailed logs.

For further assistance, refer to the Ocean Protocol documentation or join the Discord community.

Custom Compute Node: Enter your own node URL or use the default Ocean Protocol node

Wallet Integration: Use auto-generated wallet or enter private key for your own wallet

Custom Docker Images: If you need a custom environment with your own dependencies installed, you can use a custom Docker image. The default is oceanprotocol/algo_dockers (Python) or node (JavaScript)

Docker Tags: Specify version tags for your docker image (like python-branin or latest)

Algorithm: The VS Code extension automatically detects open JavaScript or Python files. Alternatively, you can specify the algorithm file manually here.

Dataset: Optional JSON file for input data

Results Folder: Where computation results will be saved

Your contributions are welcome! Please check our GitHub repository for the contribution guidelines.

Create a new bucket

Fill in the details

Allow access to your recently created Bucket

Step 2 - Upload a file

Step 3 - Change your file's access (optional)

If your bucket's access policy is restricted, click Edit access in the menu on the right (skip this step if your bucket is publicly accessible)

Step 4 - Share the file

Open the file and copy the generated link

Step 5 - Publish the asset using the generated link

Now, copy and paste the link into the Publish page in the Ocean Marketplace.

Arweave is a global, permanent, and decentralized data storage layer that allows you to store documents and applications forever. Arweave is different from other decentralized storage solutions in that there is only one up-front cost to upload each file.

Step 1 - Get a new wallet and AR tokens

Download & save a new wallet (JSON key file) and receive a small amount of AR tokens for free using the Arweave faucet. If you already have an Arweave browser wallet, you can skip to Step 3.

At the time of writing, the faucet provides 0.02 AR which is more than enough to upload a file.

If at any point you need more AR tokens, you can fund your wallet from one of Arweave's supported exchanges.

Step 2 - Load the key file into the arweave.app web wallet

Open arweave.app in a browser. Select the '+' icon in the bottom left corner of the screen. Import the JSON key file from step 1.

Step 3 - Upload file

Select the newly imported wallet by clicking the "blockies" style icon in the top left corner of the screen. Select Send. Click the Data field and select the file you wish to upload.

The fee in AR tokens will be calculated based on the size of the file and displayed near the bottom middle part of the screen. Select Submit to submit the transaction.

After submitting the transaction, select Transactions and wait until the transaction appears and eventually finalizes. This can take over 5 minutes so please be patient.

Step 4 - Copy the transaction ID

Once the transaction finalizes, select it, and copy the transaction ID.

Step 5 - Publish the asset with the transaction ID

1. Go to the pool's Etherscan/Polygonscan page. You can find it by inspecting your transactions on your account's Etherscan page under Erc20 Token Txns.

2. Click View All and look for Ocean Pool Token (OPT) transfers. Those transactions always come from the pool contract, which you can click on.

3. On the pool contract page, go to Contract -> Read Contract.

4. Go to field 20. balanceOf and insert your ETH address. This will retrieve your pool share token balance in wei.

5. Copy this number as later you will use it as the poolAmountIn parameter.

6. Go to field 55. totalSupply to get the total amount of pool shares, in wei.

7. Divide the number by 2 to get the maximum number of pool shares you can send in one pool exit transaction. If the balance you retrieved in step 4 is larger than this maximum, you have to send multiple transactions.

8. Go to Contract -> Write Contract and connect your wallet. Be sure to have your wallet connected to the pool's network.

9. Go to the field 5. exitswapPoolAmountIn

For poolAmountIn add your pool shares in wei

For minAmountOut use anything, like 1

Hit Write

10. Confirm the transaction in MetaMask
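The arithmetic in steps 4 to 7 can be sketched with BigInt, since both values are large integers in wei. The function names are hypothetical, and the numbers in the example are made up. Before each further transaction, it is safest to read balanceOf and totalSupply again rather than reuse earlier values.

```javascript
// All values are in wei, so BigInt avoids floating-point rounding.
function maxExitShares(totalSupplyWei) {
  // At most half of the total pool shares can leave in one exit transaction.
  return totalSupplyWei / 2n; // BigInt division rounds down
}

function nextExitAmount(balanceWei, totalSupplyWei) {
  // Value to use as poolAmountIn for the next exit transaction.
  const max = maxExitShares(totalSupplyWei);
  return balanceWei < max ? balanceWei : max;
}

// Made-up example values (in wei).
const totalSupply = 1000n * 10n ** 18n;
const smallBalance = 300n * 10n ** 18n;
const largeBalance = 800n * 10n ** 18n;
const smallExit = nextExitAmount(smallBalance, totalSupply); // whole balance fits
const largeExit = nextExitAmount(largeBalance, totalSupply); // capped at half the supply
```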

Purpose: This endpoint is used to fetch key information about the Aquarius service, including its current version, the plugin it's using, and the name of the software itself.

Here are some typical responses you might receive from the API:

200: This is a successful HTTP response code. It means the server has successfully processed the request and returns a JSON object containing the plugin, software, and version.

Example response:

Retrieves the health status of the Aquarius service.

Endpoint: GET /health

Purpose: This endpoint is used to fetch the current health status of the Aquarius service. This can be helpful for monitoring and ensuring that the service is running properly.

Here are some typical responses you might receive from the API:

200: This is a successful HTTP response code. It means the server has successfully processed the request and returns a message indicating the health status. For example, "Elasticsearch connected" indicates that the Aquarius service is able to connect to Elasticsearch, which is a good sign of its health.
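A health check along these lines could be scripted as follows. This is a minimal sketch, assuming a base URL that ends in a slash (such as the AQUARIUS_URL shown earlier) and a runtime with a global `fetch` (for example Node.js 18 or later). The helper names are hypothetical.

```javascript
// Builds the health endpoint URL from the Aquarius base URL.
function healthUrl(baseUrl) {
  return new URL("health", baseUrl).toString();
}

// Only a 200 response counts as healthy in this sketch.
function isHealthy(statusCode) {
  return statusCode === 200;
}

// Performs the request; not called here because it needs network access.
async function checkHealth(baseUrl) {
  const response = await fetch(healthUrl(baseUrl));
  const message = await response.text();
  return { healthy: isHealthy(response.status), message };
}

const exampleHealthUrl = healthUrl("https://v4.aquarius.oceanprotocol.com/");
```

Calling `checkHealth("https://v4.aquarius.oceanprotocol.com/")` would then resolve to an object holding the health flag and the message returned by the service.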

Curl Example

Retrieves the Swagger specification for the Aquarius service.

Endpoint: GET /spec

Purpose: This endpoint is used to fetch the Swagger specification of the Aquarius service. Swagger is a set of rules (in other words, a specification) for a format describing REST APIs. This endpoint returns a document that describes the entire API, including the available endpoints, their methods, parameters, and responses.

Here are some typical responses you might receive from the API:

200: This is a successful HTTP response code. It means the server has successfully processed the request and returns the Swagger specification.

Currently, we support Arweave and IPFS. We may support other storage options in the future.

Ready to dive into the world of decentralized storage with Ocean Uploader? Let's get started:

Woohoo 🎉 You did it! You now have an IPFS CID for your asset. Pop over to https://ipfs.oceanprotocol.com/ipfs/{CID} to admire your handiwork; your file is accessible at that link. You can use it to publish your asset on Ocean Market.

In this section, we'll walk you through three options to store your assets: Arweave (decentralized storage), AWS (centralized storage), and Azure (centralized storage). Let's goooooo!

Read on, if you are interested in the security details!

When you publish your asset as an NFT, then the URL/TX ID/CID required to access the asset is encrypted and stored as a part of the NFT's DDO on the blockchain. Buyers don't have access directly to this information, but they interact with the Provider, which decrypts the DDO and acts as a proxy to serve the asset.

We recommend implementing a security policy that allows only the Provider's IP address to access the file and blocks requests from unauthorized actors. Since not all hosting services provide this feature, you must carefully consider the security features when choosing a hosting service.

Please use a proper hosting solution to keep your files. Systems like Google Drive are not specifically designed for this use case. They include various virus checks and rate limiters that prevent the Provider from downloading the asset once it has been purchased.

Additionally, the Provider service offers compute services by establishing a connection to the C2D environment. This enables users to compute and manipulate data within the Ocean Protocol stack, adding a new level of utility and function to this data services platform.

The only component that can access your data

Performs checks on-chain for buyer permissions and payments

Encrypts the URL and metadata during publish

Decrypts the URL when the dataset is downloaded or a compute job is started

Provides access to data assets by streaming data (and never the URL)

Provides compute services (connects to C2D environment)

Typically run by the Data owner

In the publishing process, the provider plays a crucial role by encrypting the DDO using its private key. Then, the encrypted DDO is stored on the blockchain.

During the consumption flow, after a consumer obtains access to the asset by purchasing a datatoken, the provider takes responsibility for decrypting the DDO and fetching data from the source used by the data publisher.

Python: This is the main programming language used in Provider.

Flask: This Python framework is used to construct the Provider API.

HTTP Server: Provider responds to HTTP requests from clients (like web browsers), facilitating the exchange of data and information over the internet.

We recommend checking the README in the Provider GitHub repository for the steps to run the Provider. If you see any errors in the instructions, please open an issue within the GitHub repository.

The following pages in this section specify the endpoints for Ocean Provider that have been implemented by the core developers.

To inspect the errors received from Provider and their causes, please review this document.

Ocean Nodes are the core infrastructure component within the Ocean Protocol ecosystem, designed to facilitate decentralized data exchange and management. They operate on a multi-layered architecture that includes network, components, and module layers.

Key features include secure peer-to-peer communication via libp2p, flexible and secure encryption solutions, and support for various Compute-to-Data (C2D) operations.

Ocean Node's modular design allows for customization and scalability, enabling seamless integration of its core services—such as the Indexer for metadata management and the Provider for secure data transactions—ensuring robust and efficient decentralized data operations.

The Node stack is divided into the following layers:

Network layer (libp2p & HTTP API)

Components layer (Indexer, Provider)

Modules layer

libp2p supports ECDSA key pairs, and node identity should be defined as a public key.

Multiple ways of storing URLs:

Choose one node and use that private key to encrypt URLs (enterprise approach).

Choose several nodes, so your files can be accessed even if one node goes down (given at least one node is still alive).

Nodes can receive user requests in two ways:

HTTP API

libp2p from another node

They are merged into a common object and passed to the appropriate component.

Nodes should be able to forward requests between them if the local database is missing objects. (Example: Alice wants to get DDO id #123 from Node A. Node A checks its local database. If the DDO is found, it is sent back to Alice. If not, Node A can query the network and retrieve the DDO from another node that has it.)
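The forwarding behaviour in the Alice example can be sketched as a lookup that tries the local database first and then asks peers. This is a simplified, synchronous illustration: real lookups over libp2p or HTTP would be asynchronous, and the `findDdo` name and the `{ name, db }` peer shape are assumptions.

```javascript
// Looks up a DDO locally, then falls back to peers that may hold it.
function findDdo(ddoId, localDb, peers) {
  if (localDb.has(ddoId)) {
    return { ddo: localDb.get(ddoId), source: "local" };
  }
  for (const peer of peers) {
    const ddo = peer.db.get(ddoId);
    if (ddo !== undefined) {
      return { ddo, source: peer.name };
    }
  }
  // Nobody reachable has the DDO.
  return { ddo: null, source: null };
}

// Node A holds DDO #1 locally; Node B holds DDO #123.
const nodeADb = new Map([["1", { id: "1" }]]);
const nodeB = { name: "Node B", db: new Map([["123", { id: "123" }]]) };

const localHit = findDdo("1", nodeADb, [nodeB]);
const forwardedHit = findDdo("123", nodeADb, [nodeB]);
const miss = findDdo("999", nodeADb, [nodeB]);
```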

Nodes' libp2p implementation:

Should support core protocols (ping, identify, kad-dht for peering, circuit relay for connections).

For peer discovery, we should support both mDNS & Kademlia DHT.

All Ocean Nodes should subscribe to the topic: OceanProtocol. If any interesting messages are received, each node is going to reply.

An off-chain, multi-chain metadata & chain events cache. It continually monitors the chains for well-known events and caches them (V4 equivalence: Aquarius).

Features:

Monitors MetadataCreated, MetadataUpdated, MetadataState and stores DDOs in the database.

Validates DDOs according to multiple SHACL schemas. When hosting a node, you can provide your own SHACL schema or use the ones provided.

Provides proof for valid DDOs.

Monitors all transactions and events from the datatoken contracts. This includes minting tokens, creating pricing schemas (fixed & free pricing), and orders.

Performs checks on-chain for buyer permissions and payments.

The provider is crucial in checking that all the relevant fees have been paid before the consumer is able to download the asset. See the fees page for details on all of the different types of fees.

Encrypts the URL and metadata during publishing.

Decrypts the URL when the dataset is downloaded or a compute job is started.

For more details on the C2D V2 architecture, refer to the documentation in the repository:

Fundamental knowledge of using ERC-20 crypto wallets.

Ocean Protocol users require an ERC-20 compatible wallet to manage their OCEAN and ETH tokens. In this guide, we will provide some recommendations for different wallet options.

In the blockchain world, a wallet is a software program that stores cryptocurrencies secured by private keys to allow users to interact with the blockchain network. Private keys are used to sign transactions and provide proof of ownership for the digital assets stored on the blockchain. Wallets can be used to send and receive digital currencies, view account balances, and monitor transaction history. There are several types of wallets, including desktop wallets, mobile wallets, hardware wallets, and web-based wallets. Each type of wallet has its own unique features, advantages, and security considerations.

Easiest: Use the MetaMask browser plug-in.

Still easy, but more secure: Get a hardware wallet, and use MetaMask to interact with it.

oceanprotocol.com lists some other possible wallets.

When you set up a new wallet, it might generate a seed phrase for you. Store that seed phrase somewhere secure and non-digital (e.g. on paper in a safe). It's extremely secret and sensitive. Anyone with your wallet's seed phrase could spend all tokens of all the accounts in your wallet.

Once your wallet is set up, it will have one or more accounts.

Each account has several balances, e.g. an Ether balance, an OCEAN balance, and maybe other balances. All balances start at zero.

An account's Ether balance might be 7.1 ETH on Ethereum Mainnet and 2.39 ETH on the Görli testnet. You can move ETH from one network to another only through a specially set up exchange or bridge. Also, you can't transfer tokens from networks holding value, such as Ethereum Mainnet, to networks not holding value, i.e., testnets like Görli. The same is true of the OCEAN balances.

Each account has one private key and one address. The address can be calculated from the private key. You must keep the private key secret because it's what's needed to spend/transfer ETH and OCEAN (or to sign transactions of any kind). You can share the address with others. In fact, if you want someone to send some ETH or OCEAN to an account, you give them the account's address.

🧑🏽💻 Your Local Development Environment for Ocean Protocol

Functionalities of Barge

Barge offers several functionalities that enable developers to create and test the Ocean Protocol infrastructure efficiently. Here are its key components:

Aquarius

A metadata storage and retrieval service for Ocean Protocol. Allows indexing and querying of metadata.

Provider

A service that facilitates interaction between users and the Ocean Protocol network.

Ganache

Barge helps developers to get started with Ocean Protocol by providing a local development environment. With its modular and user-friendly design, developers can focus on building and testing their applications without worrying about the intricacies of the underlying infrastructure.

To use Barge, you can follow the instructions in the Barge repository.

Before getting started, make sure you have the following prerequisites:

Linux or macOS operating system. Barge does not currently support Windows, but you can run it inside a Linux virtual machine or use the Windows Subsystem for Linux (WSL).

Docker installed on your system. You can download and install Docker from the official Docker website. On Linux, you may need to allow non-root users to run Docker. On Windows or macOS, it is recommended to increase the memory allocated to Docker to 4 GB (default is 2 GB).

Docker Compose, which is used to manage the Docker containers. You can find installation instructions in the Docker Compose documentation.

Once you have the prerequisites set up, you can clone the Barge repository and navigate to the repository folder using the command line:

The repository contains a shell script called start_ocean.sh that you can run to start the Ocean Protocol stack locally for development. To start Barge with the default configurations, simply run the following command:

./start_ocean.sh

This command will start the default versions of Aquarius, Provider, and Ganache, along with the Ocean contracts deployed to Ganache.

For more advanced options and customization, you can refer to the README file in the Barge repository. It provides detailed information about the available startup options, component versions, log levels, and more.

To clean up your environment and stop all the Barge-related containers, volumes, and networks, you can run the following command:

Please refer to the Barge repository's README for more comprehensive instructions, examples, and details on how to use Barge for local development with the Ocean Protocol stack.

How can you build a self sufficient project?

The intentions with all of the updates are to ensure that your project is able to become self-sufficient and profitable in the long run (if that’s your aim). We love projects that are built on top of Ocean and we want to ensure that you are able to generate enough income to keep your project running well into the future.

Do you have data that you can monetize? 🤔

Ocean introduced the new crypto primitives of “data on-ramp” and “data off-ramp” via datatokens. The publisher creates ERC20 datatokens for a dataset (on-ramp). Then, anyone can access that dataset by acquiring and sending datatokens to the publisher via Ocean handshaking (data off-ramp). As a publisher, it’s in your best interest to create and publish useful data — datasets that people want to consume — because the more they consume the more you can earn. This is the heart of Ocean utility: connecting data publishers with data consumers 🫂

The datasets can take one of many shapes. For AI use cases, they may be raw datasets, cleaned-up datasets, feature-engineered data, AI models, AI model predictions, or otherwise. (They can even be other forms of copyright-style IP such as photos, videos, or music!) Algorithms themselves may be sold as part of Ocean’s Compute-to-Data feature.

The first opportunity of data NFTs is the potential to sell the base intellectual property (IP) as an exclusive license to others. This is akin to EMI selling the Beatles’ master tapes to Universal Music: whoever owns the masters has the right to create records, CDs, and digital sub-licenses. It’s the same for data: as the data NFT owner you have the exclusive right to create ERC20 datatoken sub-licenses. With Ocean, this right is now transferable as a data NFT. You can sell these data NFTs in OpenSea and other NFT marketplaces.

If you’re part of an established organization or a growing startup, you’ll also love the new role structure that comes with data NFTs. For example, you can specify a different address to collect revenue compared to the address that owns the NFT. It’s now possible to fully administer your project through these roles.

In short, if you have data to sell, then Ocean gives you superpowers to scale up and manage your data project. We hope this enables you to bring your data to new audiences and increase your profits.

We have always been super encouraging of anyone who wishes to build a dApp on top of Ocean or to fork Ocean Market and make their own data marketplace. And now, we have taken this to the next level and introduced more opportunities and even more fee customization options.

Ocean empowers dApp owners like yourself to have greater flexibility and control over the fees you can charge. This means you can tailor the fee structure to suit your specific needs and ensure the sustainability of your project. The smart contracts enable you to collect a fee not only on consume, but also on fixed-rate exchange, and you can set the fee value yourself. For more detailed information regarding the fees, we invite you to visit the fees page.

Another new opportunity is using your own ERC20 token in your dApp, where it’s used as the unit of exchange. This is fully supported and can be a great way to ensure the sustainability of your project.

Now this is a brand new opportunity to start generating revenue: running your own provider. We have been aware for a while now that many of you haven’t taken up the opportunity to run your own provider, and the reason seems obvious: there aren’t strong enough incentives to do so.

For those that aren’t aware, the provider is the proxy service that’s responsible for encrypting/decrypting the data and streaming it to the consumer. It also validates if the user is allowed to access a particular data asset or service. It’s a crucial component in Ocean’s architecture.

As mentioned above, fees are now paid to the individual or organization running the provider whenever a user downloads a data asset. The fees for downloading an asset are set as a cost per MB. In addition, there is also a provider fee that is paid whenever a compute job is run, which is set as a price per minute.

The download and compute fees can both be set to any absolute amount and you can also decide which token you want to receive the fees in — they don’t have to be in the same currency used in the consuming market. So for example, the provider fee could be a fixed rate of 5 USDT per 1000 MB of data downloaded, and this fee remains fixed in USDT even if the marketplace is using a completely different currency.

Additionally, provider fees are not limited to data consumption — they can also be used to charge for compute resources. So, for example, this means a provider can charge a fixed fee of 15 DAI to reserve compute resources for 1 hour. This has a huge upside for both the user and the provider host. From the user’s perspective, this means that they can now reserve a suitable amount of compute resources according to what they require. For the host of the provider, this presents another great opportunity to create an income.
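The two examples above (5 USDT per 1000 MB downloaded and 15 DAI per hour of compute) can be turned into a small calculation. This sketch uses plain numbers and hypothetical names (`downloadFee`, `computeFee`, and the rate objects). A real implementation would work in token base units and follow the provider's actual fee configuration.

```javascript
// Download fee: proportional to the amount of data downloaded.
function downloadFee(rate, sizeMb) {
  return (rate.amount * sizeMb) / rate.perMb;
}

// Compute fee: proportional to the reserved compute time.
function computeFee(rate, minutes) {
  return (rate.amount * minutes) / rate.perMinutes;
}

// Rates taken from the examples in the text.
const downloadRate = { amount: 5, perMb: 1000, token: "USDT" };
const computeRate = { amount: 15, perMinutes: 60, token: "DAI" };

const feeFor2500Mb = downloadFee(downloadRate, 2500); // in USDT
const feeFor90Minutes = computeFee(computeRate, 90); // in DAI
```

Keeping the token alongside each rate reflects the point that provider fees can be denominated independently of the currency used by the consuming market.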

Benefits to the Ocean Community

We’re always looking to give back to the Ocean community and collecting fees is an important part of that. As mentioned above, the Ocean Protocol Foundation retains the ability to implement community fees on data consumption. The tokens that we receive will either be burned or invested in the community via projects that they are building. These investments will take place either through , , or Ocean Ventures.

Projects that utilize OCEAN or H2O are subject to a 0.1% fee. In the case of projects that opt to use different tokens, an additional 0.1% fee will be applied. We want to support marketplaces that use other tokens but we also recognize that they don’t bring the same wider benefit to the Ocean community, so we feel this small additional fee is proportionate.
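Read literally, the paragraph above gives a 0.1% fee for OCEAN or H2O and 0.2% (0.1% plus an additional 0.1%) for any other token. The sketch below encodes that reading in basis points. The constant and function names are illustrative, not taken from the Ocean contracts.

```javascript
// 1 basis point = 0.01%, so 0.1% = 10 basis points.
const BASE_FEE_BPS = 10n;
const EXTRA_FEE_BPS = 10n; // added for tokens other than OCEAN or H2O
const BASE_RATE_TOKENS = new Set(["OCEAN", "H2O"]);

function communityFeeBps(tokenSymbol) {
  return BASE_RATE_TOKENS.has(tokenSymbol) ? BASE_FEE_BPS : BASE_FEE_BPS + EXTRA_FEE_BPS;
}

// Amounts are BigInt values in the token's smallest unit.
function communityFee(amount, tokenSymbol) {
  return (amount * communityFeeBps(tokenSymbol)) / 10000n;
}

const oceanFee = communityFee(1000000n, "OCEAN");
const otherFee = communityFee(1000000n, "USDC");
```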

To create a compute-to-data job, you first need to select the environment the algorithm will run on. You can list the available environments by running the CLI command getComputeEnvironments as follows:

npm run cli getComputeEnvironments

Initiating a compute job can be accomplished through two primary methods.

The first approach involves publishing both the dataset and algorithm, as explained in the previous section. Once that's completed, you can proceed to initiate the compute job.

Alternatively, you have the option to explore available datasets and algorithms and kickstart a compute-to-data job by combining your preferred choices.

To illustrate the latter option, you can use the following command:

In this command, replace DATASET_DID with the specific DID of the dataset you intend to utilize and ALGO_DID with the DID of the algorithm you want to apply. By executing this command, you'll trigger the initiation of a compute-to-data job that harnesses the selected dataset and algorithm for processing.

To run algorithms for free by starting a compute job, follow these steps.

Note

Only for free start compute, the user does not have to provide a dataset on the command line. The required command-line parameters are the algorithm DID and the environment ID, retrieved from the getComputeEnvironments command.

The first step involves publishing the algorithm, as explained in the previous section. Once that's completed, you can proceed to initiate the compute job.

Alternatively, you have the option to explore available algorithms and kickstart a free compute-to-data job by combining your preferred choices.

To illustrate the latter option, you can use the following command for running free start compute with additional datasets:

In this command, replace DATASET_DID with the DID of the dataset you intend to use, ALGO_DID with the DID of the algorithm you want to apply, and the environment with the free start compute environment returned by npm run cli getComputeEnvironments.

By executing this command, you'll initiate a free compute-to-data job with the algorithm provided.

Free start compute can also be run without any published dataset; only the algorithm and the environment are required:

NOTE: For zsh console, please surround [] with quotes like this: "[]".

To obtain the compute results, we'll follow a two-step process. First, we'll use the `getJobStatus` method, monitoring the job until it reports completion. Afterward, we'll use a second method to download the actual results.

To monitor the algorithm's execution logs and its setup configuration, use this command:

To track the status of a job, you'll require both the dataset DID and the compute job DID. You can initiate this process by executing the following command:

Executing this command will allow you to observe the job's status and verify its successful completion.
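The monitoring step described above amounts to a polling loop. The following is a minimal sketch in Python, not the Ocean CLI's own implementation: `get_status` stands for any caller-supplied function that returns the job's current status, and the status names `"finished"` and `"failed"` are assumptions made for illustration.

```python
import time
from typing import Callable, Sequence


def wait_for_job(
    get_status: Callable[[], str],
    done_states: Sequence[str] = ("finished", "failed"),  # hypothetical state names
    poll_seconds: float = 5.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_status() repeatedly until it returns a terminal state.

    Returns the terminal state, or raises TimeoutError after max_polls attempts.
    """
    for _ in range(max_polls):
        status = get_status()
        if status in done_states:
            return status
        # Job still running: wait before asking again.
        sleep(poll_seconds)
    raise TimeoutError(f"job did not complete after {max_polls} polls")
```

Only once the loop returns a terminal state does it make sense to move on to downloading the results.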

For the second method, the dataset DID is no longer required. Instead, you'll need to specify the job ID, the index of the result you wish to download from the available results for that job, and the destination folder where you want to save the downloaded content. The corresponding command is as follows:

Compute to data version 2 (C2dv2)

Certain datasets, such as health records and personal information, are too sensitive to be directly sold. However, Compute-to-Data offers a solution that allows you to monetize these datasets while keeping the data private. Instead of selling the raw data itself, you can offer compute access to the private data. This means you have control over which algorithms can be run on your dataset. For instance, if you possess sensitive health records, you can permit an algorithm to calculate the average age of a patient without revealing any other details.
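To make the average-age example concrete, here is a minimal Python sketch of the kind of algorithm a data owner might approve. The record layout (a list of dictionaries with an `age` field) is an assumption for illustration and is not a format prescribed by Ocean.

```python
from statistics import mean
from typing import Iterable, Mapping


def average_age(records: Iterable[Mapping]) -> float:
    """Return only the mean of the 'age' field.

    Names, diagnoses, and every other field stay inside the compute
    environment; the aggregate is the only value handed back.
    """
    ages = [record["age"] for record in records]
    if not ages:
        raise ValueError("dataset contains no records")
    return mean(ages)


# Hypothetical private records, visible only where the data lives.
patients = [
    {"name": "A", "age": 30, "diagnosis": "x"},
    {"name": "B", "age": 40, "diagnosis": "y"},
    {"name": "C", "age": 50, "diagnosis": "z"},
]
result = average_age(patients)
```

The buyer of compute access receives `result` and nothing else, which is the property the paragraph above describes.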

Compute-to-Data effectively resolves the tradeoff between leveraging the benefits of private data and mitigating the risks associated with data exposure. It enables the data to remain on-premise while granting third parties the ability to perform specific compute tasks on it, yielding valuable results like statistical analysis or AI model development.

Private data holds immense value as it can significantly enhance research and business outcomes. However, concerns regarding privacy and control often impede its accessibility. Compute-to-Data addresses this challenge by granting specific access to the private data without directly sharing it. This approach finds utility in various domains, including scientific research, technological advancements, and marketplaces where private data can be securely sold while preserving privacy. Companies can seize the opportunity to monetize their data assets while ensuring the utmost protection of sensitive information.

Private data has the potential to drive groundbreaking discoveries in science and technology, with increased data improving the predictive accuracy of modern AI models. Due to its scarcity and the challenges associated with accessing it, private data is often regarded as the most valuable. By utilizing private data through Compute-to-Data, significant rewards can be reaped, leading to transformative advancements and innovative breakthroughs.

We suggest reading these guides to get an understanding of how compute-to-data works:

Earn $, track data & compute provenance, and get more data

It offers three main benefits:

Earn. You can earn $ by doing crypto price predictions via Predictoor, by curating data in Data Farming, competing in a data challenge, and by selling data & models.

More Data. Use Compute-to-Data to access private data that was previously inaccessible and run your AI modeling algorithms against it. Browse Ocean Market and other Ocean-powered markets to find more data to improve your AI models.

Provenance. The acts of publishing data, purchasing data, and consuming data are all recorded on the blockchain to make a tamper-proof audit trail. Know where your AI training data came from!

Here are the most relevant Ocean tools to work with:

The ocean.py library is built for the key environment of data scientists: Python. It can simply be imported alongside other Python data science tools like numpy, matplotlib, scikit-learn and tensorflow. You can use it to publish & sell data assets, buy assets, transfer ownership, and more.

Predictoor has Python-based tools to run bots for crypto prediction or trading.


Yes. This section has two other pages which elaborate:

lays out the life cycle of data, and how to focus towards high-value use cases.

helps think about pricing data.

The blog post elaborates further on the benefits of more data, provenance, and earning.

Use these steps to reveal the information contained within an asset's DID and list the buyers of a datatoken

If you are given an Ocean Market link, then the network and datatoken address for the asset are visible on the Ocean Market webpage. For example, given this asset's Ocean Market link: https://odc.oceanprotocol.com/asset/did:op:1b26eda361c6b6d307c8a139c4aaf36aa74411215c31b751cad42e59881f92c1 the webpage shows that this asset is hosted on the Mumbai network. Click the datatoken's hyperlink to reveal the datatoken's address, as shown in the screenshot below:

You can access all the information for the Ocean Market asset also by enabling Debug mode. To do this, follow these steps:

Step 1 - Click the Settings button in the top right corner of the Ocean Market

Step 2 - Check the Activate Debug Mode box in the dropdown menu

Step 3 - Go to the page for the asset you would like to examine, and scroll through the DDO information to find the NFT address, datatoken address, chain ID, and other information.

If you know the DID (did:op:...) but not the source link, you can use Ocean Aquarius to resolve the DID's metadata and find the asset's chain ID and datatoken address. Simply enter "<your did:op:XXX>" in your browser to fetch the metadata.

For example, for the DID "did:op:1b26eda361c6b6d307c8a139c4aaf36aa74411215c31b751cad42e59881f92c1", the Ocean Aquarius URL can be extended with the DID to resolve its metadata. Simply put the Aquarius metadata endpoint URL in front of the DID and enter the resulting link in your browser like this:
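The same URL construction can be sketched in Python. This is an illustration under stated assumptions: the endpoint prefix is passed in by the caller (the value used below is a placeholder, not the real Aquarius path), and the check that a DID is `did:op:` followed by 64 lowercase hex characters is inferred from the example DID rather than taken from a specification.

```python
import re

# Assumption: shape inferred from the example DID in this guide.
DID_PATTERN = re.compile(r"^did:op:[0-9a-f]{64}$")


def metadata_url(endpoint_prefix: str, did: str) -> str:
    """Prepend the metadata endpoint to a DID, after a basic sanity check."""
    if not DID_PATTERN.match(did):
        raise ValueError(f"not a well-formed did:op identifier: {did!r}")
    # Avoid a double slash when the prefix already ends with one.
    return endpoint_prefix.rstrip("/") + "/" + did


# Placeholder prefix; substitute the Aquarius endpoint you actually use.
EXAMPLE_PREFIX = "https://aquarius.example/ddo-endpoint/"
url = metadata_url(
    EXAMPLE_PREFIX,
    "did:op:1b26eda361c6b6d307c8a139c4aaf36aa74411215c31b751cad42e59881f92c1",
)
```

Validating the DID first catches copy-paste mistakes before a request is ever sent.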

Here are the networks and their corresponding chain IDs:

🧑🏽💻 Remote Development Environment for Ocean Protocol

This article describes how to configure Ocean Protocol components (the libraries, Provider, Aquarius, and Subgraph) against remote networks, without using Barge services.

Ocean Protocol's smart contracts are deployed on EVM-compatible networks. Using an API key from a third-party Ethereum node provider lets you interact with Ocean Protocol's smart contracts on the supported networks without hosting a local node.

Choose any API provider you like. Some commonly used ones are:

The supported networks are listed .

Let's configure the remote setup for the mentioned components in the following sections.

The Uploader represents a cutting-edge solution designed to streamline the upload process within a decentralized network. Built with efficiency and scalability in mind, Uploader leverages advanced technologies to provide secure, reliable, and cost-effective storage solutions to users.

Once you've configured the RPC environment variable, you're ready to publish a new dataset on the connected network. The flexible setup allows you to switch to a different network simply by substituting the RPC endpoint with one corresponding to another network. 🌐
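Because the target network is selected purely by the RPC endpoint, it can help to validate that value before running any command. Below is a minimal Python sketch; the variable name `RPC` is taken from the wording above and should be treated as an assumption, and the endpoints shown are placeholders.

```python
from typing import Mapping
from urllib.parse import urlparse


def rpc_endpoint(env: Mapping[str, str], var_name: str = "RPC") -> str:
    """Read the RPC endpoint from an environment mapping and sanity-check it."""
    url = env.get(var_name, "").strip()
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https", "ws", "wss") or not parsed.netloc:
        raise ValueError(f"{var_name} must be set to a valid RPC URL, got {url!r}")
    return url


# Switching networks only means substituting the endpoint (placeholder URLs).
network_a = rpc_endpoint({"RPC": "https://rpc.network-a.example/v1/KEY"})
network_b = rpc_endpoint({"RPC": "https://rpc.network-b.example/v1/KEY"})
```

In a real script you would pass `os.environ` as the mapping instead of a literal dictionary.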

For setup configuration on Ocean CLI, please consult first

To initiate the dataset publishing process, we'll start by updating the helper DDO (Decentralized Data Object) example named "SimpleDownloadDataset.json." This example can be found in the ./metadata folder, located at the root directory of the cloned Ocean CLI project.

The new Ocean stack

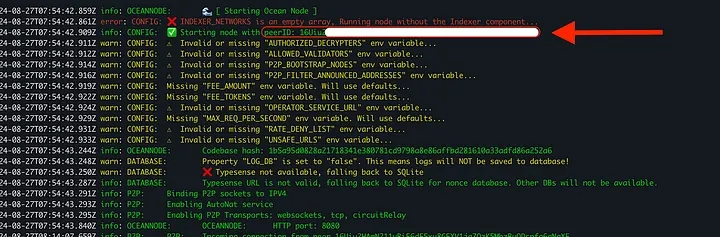

Ocean Nodes are a vital part of the Ocean Protocol core technology stack. Ocean Nodes is a monorepo that replaces the three previous components: Provider, Aquarius, and Subgraph. It has been designed to significantly simplify the process of starting the Ocean stack: it runs everything you need with one simple command.

It integrates multiple services for secure and efficient data operations, utilizing technologies like libp2p for peer-to-peer communication. Its modular and scalable architecture supports various use cases, from simple data retrieval to complex compute-to-data (C2D) tasks.

The node is structured into separate layers, including the network layer for communication, and the components layer for core services like the Indexer and Provider. This layered architecture ensures efficient data management and high security.

Flexibility and extensibility are key features of Ocean Node, allowing multiple compute engines, such as Docker and Kubernetes, to be managed within the same framework. The orchestration layer coordinates interactions between the core node and execution environments, ensuring the smooth operation of compute tasks.

For details on how to run a node see the in the GitHub repository.

However, your nodes must meet specific criteria in order to be eligible for incentives. Here’s what’s required:

After creating a DDO instance based on the DDO's version, we can interact with the DDO fields through the following methods:

getDDOFields() which returns DDO fields such as:

id: The Decentralized Identifier (DID) of the asset.

version

Provider offers an alternative to signing each request, by allowing users to generate auth tokens. The generated auth token can be used until its expiration in all supported requests. Simply omit the signature parameter and add the AuthToken request header based on a created token.

Please note that if a signature parameter exists, it will take precedence over the AuthToken headers. All routes that support a signature parameter support the replacement, with the exception of auth-related ones (createAuthToken and deleteAuthToken need to be signed).
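The precedence rule can be mirrored on the client side when assembling a request. The following Python sketch only builds the query parameters and headers; it does not call Provider. The `AuthToken` header and `signature` parameter names come from the text above, while the function name and the `jobId` parameter in the usage lines are hypothetical.

```python
from typing import Dict, Optional, Tuple


def build_request(
    params: Dict[str, str],
    signature: Optional[str] = None,
    auth_token: Optional[str] = None,
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Return (query_params, headers) using either a signature or an auth token."""
    query = dict(params)
    headers: Dict[str, str] = {}
    if signature is not None:
        # A signature takes precedence, so a token would be ignored anyway.
        query["signature"] = signature
    elif auth_token is not None:
        # Token-based auth: omit the signature and send the header instead.
        headers["AuthToken"] = auth_token
    else:
        raise ValueError("either a signature or an auth token is required")
    return query, headers


query, headers = build_request({"jobId": "abc"}, auth_token="token-value")
```

Sending only one of the two credentials per request keeps the behavior unambiguous on both sides.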

Endpoint: GET /api/services/createAuthToken

Explore and manage the revenue generated from your data NFTs.

Having a data NFT that generates revenue continuously, even when you're not actively involved, is an excellent source of income. Each time someone buys access to your NFT, you receive money, so you can enjoy the rewards of your asset without constant engagement. 💰

By default, the revenue generated from a data NFT is directed to the owner of the NFT. This arrangement automatically updates whenever the data NFT is transferred to a new owner.

However, there are scenarios where you may prefer the revenue to be sent to a different account instead of the owner. This can be accomplished by designating a new payment collector. This feature becomes particularly beneficial when the data NFT is owned by an organization or enterprise rather than an individual.
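The routing rule described above can be summarized in a few lines of Python. This is a conceptual sketch only, not the smart-contract logic: the function name and the addresses are hypothetical, and it does not model what happens to a custom collector when the NFT is transferred.

```python
from typing import Optional


def revenue_recipient(owner: str, payment_collector: Optional[str] = None) -> str:
    """Revenue goes to the designated payment collector if one is set, else to the owner."""
    return payment_collector if payment_collector else owner


# Default case: revenue follows whoever currently owns the data NFT.
before_transfer = revenue_recipient(owner="0xAlice")
after_transfer = revenue_recipient(owner="0xBob")

# An organization routes revenue to a treasury account instead of the owner.
org_case = revenue_recipient(owner="0xOrgAdmin", payment_collector="0xTreasury")
```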

To get started with the Ocean CLI, follow these steps for a seamless setup:

Begin by cloning the repository. You can achieve this by executing the following command in your terminal:

Cloning the repository will create a local copy on your machine, allowing you to access and work with its contents.

Python library to privately & securely publish, exchange, and consume data.

Ocean.py helps data scientists earn $ from their AI models, track provenance of data & compute, and get more data.

Ocean.py makes these tasks easy:

Publish data services: data feeds, REST APIs, downloadable files or compute-to-data. Create an ERC721 data NFT for each service, and an ERC20 datatoken for access (1.0 datatokens to access).

Sell datatokens via for a fixed price. Sell data NFTs.

{

"plugin": "elasticsearch",

"software": "Aquarius",

"version": "4.2.0"

}

curl --location --request GET 'https://v4.aquarius.oceanprotocol.com/'

curl --location --request GET 'https://v4.aquarius.oceanprotocol.com/health'

curl --location --request GET 'https://v4.aquarius.oceanprotocol.com/spec'

npm run cli getComputeEnvironments

A local Ethereum blockchain network for testing and development purposes.

TheGraph

A decentralized indexing and querying protocol used for building subgraphs in Ocean Protocol.

ocean-contracts

Smart contracts repository for Ocean Protocol. Deploys and manages the necessary contracts for local development.

Customization and Options

Barge provides various options to customize component versions, log levels, and enable/disable specific blocks.

git clone git@github.com:oceanprotocol/barge.git